The reliability crisis

“If we can’t ship safely, we aren’t shipping at all,” Cal Henderson wrote in our #announce-devel channel.

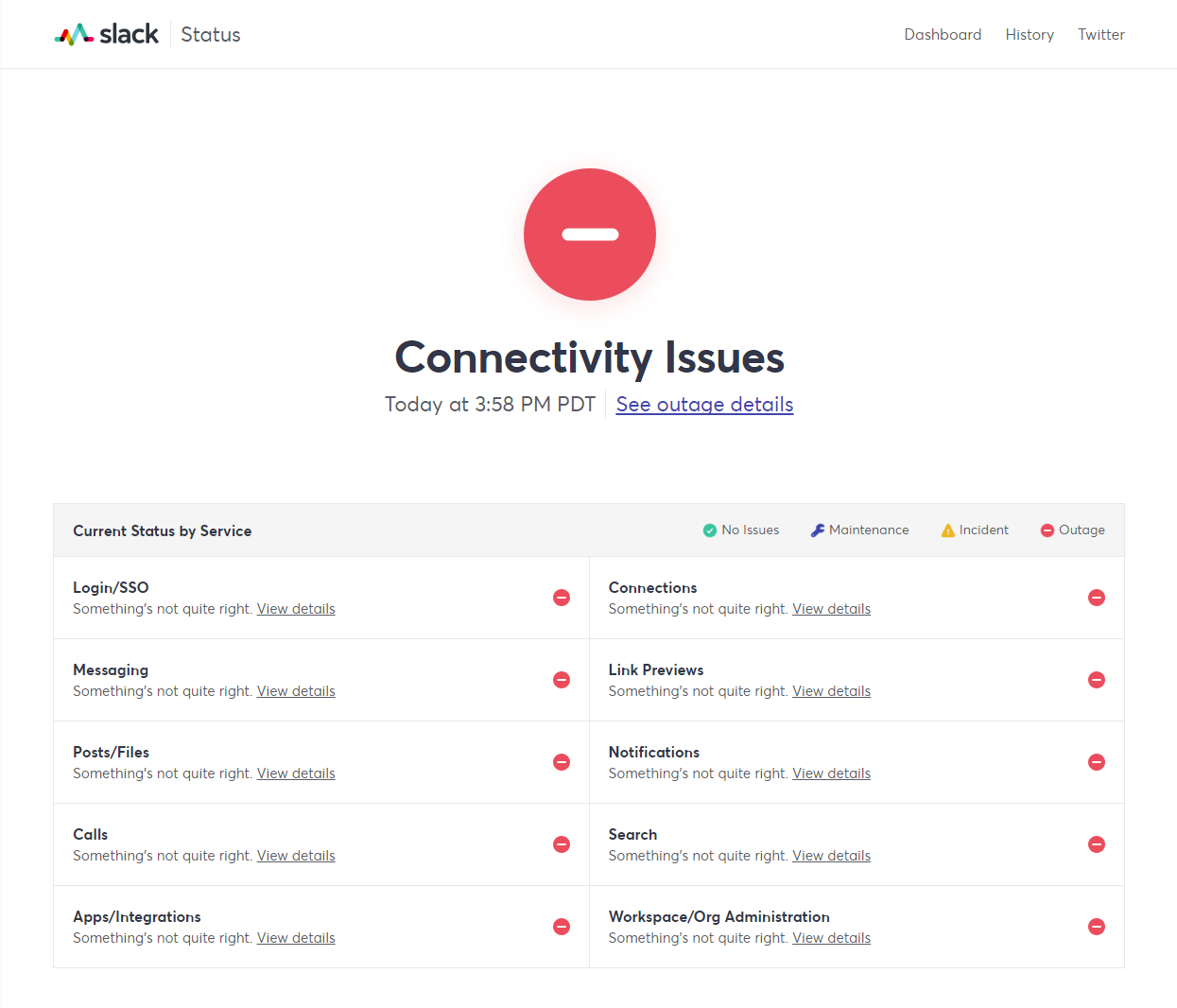

It was September 2018. We had just recovered from our second major self-inflicted outage in a period of weeks. Slack – after experiencing an extended period of incidents and this most recent sequence of outages – was back up. But we weren’t in a position to release any updates to it. We couldn’t be certain if it would break again.

As a company, we prided ourselves on being a reliable tool for our customers. People trusted us with their data and relied on us to be stable so they could do their work. As an engineering team, our reputation was built on rapidly scaling and continuously improving Slack while maintaining that reliability.

For the first time, we couldn’t follow through on that promise. We’d let our customers and ourselves down, and our CTO had to stop the line so we could fix the situation we were in.

The Verge wrote poems to mark our outages.

How we got here

In the 80s and early 90s, software came in a box. First on floppy disks, then CD-ROMs. You went to the store, bought it, brought it home, and installed it on your computer. Most software, once installed, didn’t change. You got what you paid for.

This had upsides: the software always worked when you ran it. You weren’t vulnerable to the unpredictable weather of networks, service outages, or randomly introduced bugs from updates you didn’t ask for. But it also meant software didn’t improve. Shipped bugs festered, and new content and features weren’t forthcoming until the next box shipped, maybe in a year or two. Maybe never.

By the late 90s, “the cloud” had arrived. Software still sometimes shipped on a disc, but was updated thereafter with downloaded updates. By the mid 2000s, software-as-a-service (SaaS) was becoming the dominant paradigm. Software was no longer delivered on a physical artifact, it was continuously released on a server on the internet. This had tremendous upsides: the software kept improving, expanding, and (usually) getting faster. But it also meant that the software continually changed, and could become unstable if those changes had bugs or the service had scaling issues.

Slack was tailor-made for the software-as-a-service paradigm. Not only did this give us an incredible ability to constantly improve and evolve the product, it gave us a shockingly good business model. Once you started paying for Slack, you kept paying for it as long as you kept using it. Since people largely found Slack essential once they started using it, business was very good. Our customers depended on Slack for a huge swath of their daily activity. Without it, they were left deaf, dumb, and blind when it came to the inner workings of their organization.

But Slack had grown a lot since we started. The product became more complicated, with dozens of features added since the original version. The fleet of servers we ran the product on grew massively as we added customers, services, and databases. The engineering team swelled by two orders of magnitude, from 6 engineers in early 2013 to more than 600 by 2018. Most of those engineers were focused on expanding the capabilities and footprint of the core Slack product and platform.

Thanks to this growth, we were shipping far more code to far more services in 2018 than we had ever anticipated. Our codebase sprawled out to millions of lines across dozens of repositories, deployed continuously to hundreds of servers, with desktop and mobile apps installed on millions of devices.

However, our processes and systems lagged this growth. We had grown whole departments dedicated to recruiting, hiring, and onboarding our next generation of employees. We had hired layers of managers to facilitate the useful direction and oversight of all the new staff. We tried to build repeatable processes and document how everything worked. Despite this, there was a lot of confusion and gaps in knowledge when it came to understanding Slack as a whole. We hadn’t figured out how to compartmentalize and subdivide ownership of that whole to make sure nothing fell through the cracks.

Things started breaking. Any given outage had different characteristics and root causes. Sometimes a network configuration change. Sometimes a scaling issue as demand outstripped our ability to expand our server footprint. Sometimes inefficient code in a hot codepath. Oftentimes an errant code change triggering a cascade of issues.

We had done a great deal of work to allow a given Slack instance to scale to the largest companies in the world. We were still grappling with how to scale how we built and released software across our growing engineering staff to the increasingly large number of customers who wanted it.

Many of our emergent issues pointed to a lack of determinism and automation in our CI/CD (continuous integration / continuous deployment) workflow. We relied more on manual code review and manual build testing than we should. This in turn relied on sparsely documented areas of tribal knowledge. We had good test coverage in some areas, but none in others. Worse, we had flakey tests for several parts of the code, which devs learned to disregard.

We relied on a somewhat fragile build pipeline that sporadically failed, making devs distrust its output. We deployed using a set of semi-manual steps paired with anxious graph-watching. If something went wrong, rollbacks were not always straightforward. Major outages became a major headache, not just for our customers who lost the tool they needed for their jobs, but because they required disruptive, stressful efforts to remedy by a wide range of our engineers and ops staff.

The camel's back

Exacerbating our issues, we were in the midst of rewriting large swaths of our codebases from legacy programming languages and techniques to more modern approaches that suited the scale and complexity that Slack had grown into.

In our web and desktop apps, we were rewriting our codebase from a dense bundle of homegrown JavaScript held together with a patchwork of small libraries to the React and Redux framework that was gaining traction at the time. This promised improved app performance and a more easily extensible codebase. However, while the rewrite was underway we had to maintain both the old and new code, and ship them together while the migration proceeded. This was not without its challenges.

On the backend, we were migrating much of our PHP code to Hacklang. This promised improved developer productivity and type safety, but came with a steep learning curve. Far fewer engineers were familiar with Hack than PHP and had to learn on the job. It was also a grind, as the many millions of lines of PHP formed the bulk of our primary code repository.

Finally, we had recently drastically reorganized our database layer, adopting Vitess to address the scale and new data relationships we needed for the Enterprise Grid and Shared Channels features. As we migrated from MySQL, we inevitably ran into bottlenecks and scaling hiccups as we rewrote our data access patterns across the apps to take advantage of Vitess.

Meanwhile our build, ops and infrastructure teams were scaling and reorganizing all the subsystems that allowed us to package and release all this software to the world. Their job was to stay ahead of the growth of the teams they supported and the demand from our customers. This was a thankless, seemingly endless task.

So here we were, growing at breakneck speed, trying to ship ambitious new features, and rewriting the code that Slack ran on, all at once. We were building the metaphorical airplane while flying it.

We had reached a tipping point. Too many cultural, systemic and technical issues had piled up, and the consequences were showing up in our service reliability. Incidents had become too common, and severe outages unacceptably frequent.

The price of reliability

To add a further important wrinkle to all this: our outages were costing us real money. Not just from disruption or extra work, but because we backed up our promises to customers with an agreement that we would refund them for the lost time.

We promised 99.99% uptime to our users. This is what’s known as “4 9s of reliability” in the industry. When measuring the uptime of a service, you can calculate the availability as a percentage. In our case, we promised 99.99% in our Service Level Agreement — this equates to no more than 4.3 minutes of downtime per month.

For comparison, “3 9s” or 99.9% allows for 44 minutes of downtime per month (10x more than 4 9s), whereas “5 9s” or 99.999% would allow for only 26 seconds of downtime per month (10x less than 4 9s). Adding fractions of a percentage to your uptime guarantees becomes increasingly difficult and expensive. And no service achieves 100% availability, given enough time.

In typical Slack fashion, we didn’t just promise to refund our customers for outages, we promised to refund them at 100x the cost of the lost time. From our (now-archived) legal agreement:

If we fall short of our 99.99% uptime guarantee, we’ll refund you 100 times the amount your workspace paid during the period Slack was down in the form of “Service Credits”. When you reach your renewal date, or if you add new members, we’ll first draw from your credit balance before charging you.

What did this mean, practically? If we went down for an hour during an outage, we would give all our customers on the Plus and Grid plans 100 hours’ worth of credits. If a customer was paying us $10,000 a month, 100 hours was worth about $1,332 in credits. For one customer, for one outage. This multiplied rapidly across thousands of customers and multiple outages.

Stewart addressed this on an earnings call shortly after this period of instability (note this is a transcript of an answer to a live question and not a prepared remark):

“The last thing I want to note though, for the service credits, there are a bunch of things that we do that are unusual besides what Allen mentioned, which is the payout ratio. One is, customers don't have to request it, we just proactively give it. And almost no outages, I don't know every detail for this quarter but almost no outages affect all customers, in fact most of them affect like 1% or 0.5% or 3% of customers in any given time. And we give those service credits to every customer even if they were not specifically affected. So those policies are outrageously customer-centric, which is part of our background and our orientation. And that is one of the reasons you see that effect. It's not necessarily proportionate to the outage, because if we had the same SLA as Salesforce or Microsoft or any of our peers in the industry, we wouldn't have paid out anything because we would have hit the 3 9s they're committed to.”

We had set ourselves up for this pain by expecting very high standards of ourselves – 99.99% uptime compared to our peers’ 99.9% – and by backing that up with a financial commitment to do right by customers. As Stewart describes it, this was an “outrageously customer-centric” approach which pushed the industry to do better. It followed our fair billing policy which only charged customers based on the number of people at their company who were actually using Slack. For us it was just the right thing to do.

We usually met and often exceeded our guarantees, frequently offering months with 100% uptime. This engendered trust from our existing teams and promised a reliable investment for prospective customers.

At least, it did until we stopped meeting our uptime guarantees.

Carrier-grade

Slack had arrived in a curious place for a service that started as a low-stakes tool for communicating with your colleagues. Early customers used it to chat, send gifs and jokes, and share files more quickly than email allowed. Over time customers began to depend on it for their critical business operations. In some industries that adopted Slack, the timeliness and consistency of Slack messages became essential to their work in logistics, healthcare, dispatch and so on. Eventually, Slack became a load-bearing part of the operations of some of the biggest companies in the world. Without it, the daily work of a company came to a standstill, with real-world consequences.

As we grew, we had built and communicated our commitments around being ready to handle sensitive communications and maintaining “Enterprise-grade” security and compliance. But we had reached a new level of expectations from our customers to not just be solid and secure, but “carrier-grade”.

Carrier-grade refers to the reliability of the fundamental communication networks the modern world depends upon. Any service can only be as reliable as the infrastructure it runs on, so things like telecommunications infrastructure and networks are designed and built to be as reliable as possible. This includes mechanisms for redundancy and resiliency in the face of infrequent or unpredictable events, and the ability to smoothly handle surges in traffic. Carrier-grade aims to reach the lofty heights promised by “5 9s” of availability (though in practice only achieves this over shorter periods of time).

This was an exceedingly – perhaps impossibly – high bar to meet. But it became our north star as we began to chart our way out of the mess we found ourselves in.

Digging out

On the day Cal stopped the line, we set up a task force of top engineers and managers to address the underlying issues that had accumulated. We set aside all other ongoing work and put all hands on deck to address the crisis. Our response covered four primary areas: deploys, tests, reviews, and build automation.

First, we determined the bare minimum set of systems that needed to be ready to deploy in case of an emergent issue. This would not be used to release features, only to respond to incidents. These were overseen by a set of key engineers who had to authorize any deploy that went out. This was a dramatic reduction from the rolling semi-automated deploys that would otherwise have occurred every hour or so. But it allowed us to resume a basic posture of release readiness should the need arise while we focused on our other issues.

Second, we recognized that our approach to testing needed a reboot. Tests allow engineers to write bits of code that assert how something should work. When a build is run through them, tests flag whether something has broken, either in the intended feature area or in something unrelated. When these are reliable, they offer both a safeguard against unintended breakage and cues to the developer about how the code they’re editing is expected to work.

Our tests were a threadbare patchwork at best: some areas well tested, many untested, still others with unreliable “flaky” tests that didn’t deterministically indicate whether the code was working properly. This was a side-effect of our product development velocity over the first 5 years, where rapid prototyping, iteration and releases were prioritized over well-tested code. That tradeoff had caught up to us, and we had a fairly deep hole to dig out of.

To address this, test coverage targets were agreed upon and implemented, measuring what percentage of files or functions would have accompanying tests – usually 30-70% of a given area of the codebase. These goals were fanned out across all the working groups in engineering to prioritize coverage for the code they knew best. Live dashboards were set up to track progress toward these goals.

Many product engineers who had been busily writing features now became test engineers, spending weeks writing and refining test suites for those features and the backlog of untested files. For many, this was a welcome chance to solidify and improve the safety of their code. Those who had correctly pointed out over the years that our spotty test coverage was a liability were happy to fill in the gaps and set up durable approaches to testing. Others grudgingly recognized that it was a best practice we were overdue to adopt.

Third, code reviews – in which every changeset was reviewed by another engineer before being merged – were temporarily beefed up. We now had to have two reviews for each changeset, one from a peer and one from a subject matter expert in the area of code being changed. Furthermore, complete manual testing steps for each change now needed to be written and followed before approving a Pull Request.

Previously, any given change only needed one approval to be merged. Depending on the change this review was often cursory at best. A quick `lgtm +1` indicated that someone had at least glanced at your code change. This didn't fly any more. With two reviewers, the social pressure not only of the initial review but the knowledge that a second reviewer would be involved imposed a much higher bar. This showed up in more thorough reviews, more iteration and refinement of the code, and better testing of each change before it was merged. Eventually this would be relaxed back to one review but the overall expectations around code review were reset and led to increased safety.

Finally, the largest area of effort focused on the reliability and automation of our build and deploy pipeline. Up to this point, visibility into emergent issues was not always available, and our tooling around deploys was creaky and not always trusted. Every step of our existing process became a target for automation, from staging the latest code, running tests, deploying dogfood builds for internal testing, and eventually rolling out that build to production. Previously manual steps were refined for consistency and visibility and restructured for automation.

This allowed us to move from a spiky cadence of manually-run deploys to a consistent, always-rolling deploy pipeline that included percentage-based and zonal rollouts, automated alerts, and trustworthy dashboards showing the state of the service and the deploy.

Instant and dependable rollbacks allowed us to rapidly recover from deploy-related incidents – a dramatic improvement over our previous approach of fixing-forward issues. Up to this point, the rollback tools we had were unreliable, so we didn’t use them even when a rollback could have saved customer impact. Now we had a way to instantly undo a faulty deploy, greatly reducing those impacts and allowing us to meet our uptime guarantees.

This huge effort fundamentally reshaped the nature of our build and deploy process, and greatly increased safety and stability. In turn this gave our engineers confidence and reduced friction and anxiety in their development workflow. We had moved from a somewhat ramshackle set of tools and processes that had grown up over time to a solid, tested and trusted toolchain that became the backbone of our ability to continually deploy the service.

These efforts captured the work of hundreds of engineers coordinating to get Slack back where it needed to be. In the meantime product priorities were pushed and feature releases were delayed. Altogether it took over a month to get back to a known-good situation where we could confidently deploy Slack, and several more months for our new processes and systems to stabilize enough for us to get back to the level of productivity we expected.

Never let a good crisis go to waste

In hindsight, the reliability crisis was a blessing in disguise. As Cal Henderson noted when we discussed it recently, “The reliability crisis was actually a period of stability for customers.” Because we focused our efforts inward for a while, we weren’t making dramatic changes to the service. It remained up and undisrupted, and our efforts ensured that our newfound stability persisted.

Slowly, our self-imposed shutdown paid off. Slack became more stable and more scalable. Our processes became more resilient and mature. And the overall professionalism and confidence of our engineering team grew as we rebalanced our efforts between product development, code quality and service reliability. Our high-priority technology migrations, already under time pressure to finish, resumed and in some cases accelerated thanks to the new scaffolding around the code that improved safety, testability and confident deployment.

The reliability crisis arrived at the right time. As we rebounded from it, we were better positioned than ever for the opportunity ahead of us.

Thanks to Cal Henderson, Keith Adams and Mark Christian for their notes on this post.